Introduction

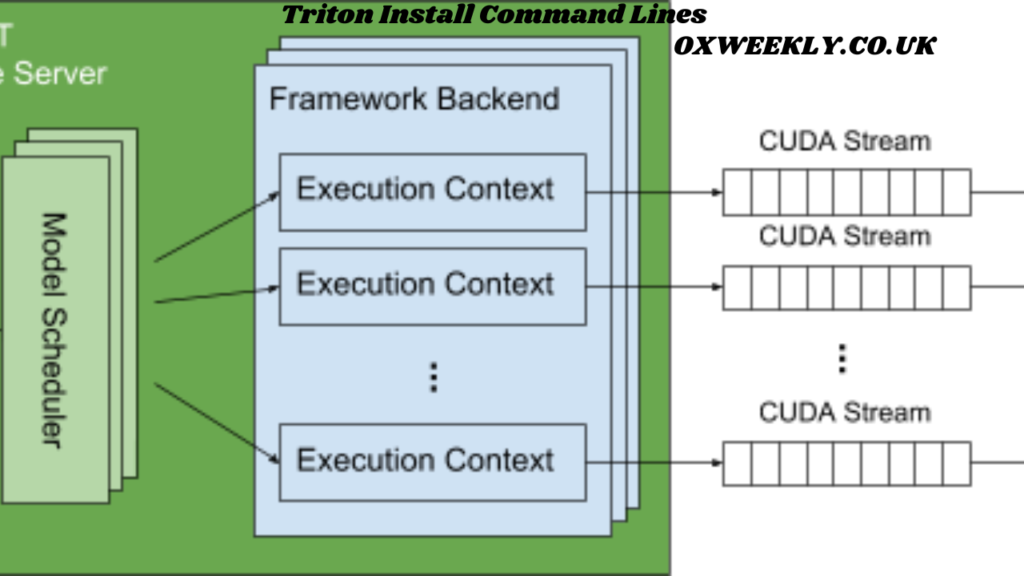

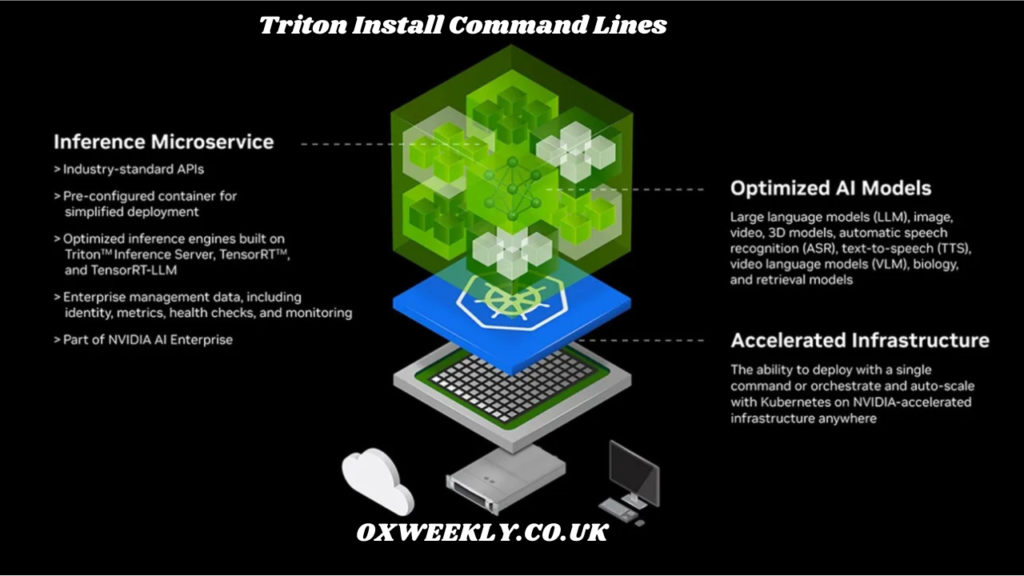

Triton Inference Server is open-source inference serving software from NVIDIA that streamlines the deployment of AI models. It supports many frameworks, including TensorFlow, PyTorch, and ONNX, which gives developers flexibility in how they build their models. A correct installation is essential for good performance, and installing from the command line gives you a simple, configurable setup. This article covers the command lines for installing Triton, the available installation methods, system requirements, and troubleshooting procedures to help you set up Triton smoothly.

Prerequisites for Installing Triton Inference Server

Before proceeding with the installation, ensure that your system meets the necessary requirements:

- Operating System: Ubuntu 20.04 or later, or other Linux distributions with Docker support.

- Hardware: NVIDIA GPU with CUDA support (if using GPU acceleration).

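- Drivers & Software:

  - NVIDIA GPU drivers (a version supported by the Triton release you plan to use; each release's notes state the minimum).

  - NVIDIA Container Toolkit (gives Docker containers access to the GPU).

  - Docker (if using the containerized version of Triton).

  - Python 3.x (for local installation and testing).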

Having these prerequisites in place will help avoid issues during the installation process.
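Before installing, a quick way to see which of these tools are already on the machine is a small shell check. This is a minimal sketch: the function name `check_cmds` is hypothetical, and it only tests whether each command is on `PATH`, not whether the driver or tool versions are suitable.

```bash
#!/usr/bin/env bash
# check_cmds (hypothetical helper): report which commands are on PATH.
# Returns 0 when every command is found, 1 when at least one is missing.
check_cmds() {
  local cmd missing=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found:   $cmd"
    else
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Tools used in this guide; nvidia-smi is only expected on GPU hosts.
# check_cmds docker nvidia-smi python3 git curl
```

Because the function only reports and returns a status, it can also guard a setup script, for example `check_cmds docker nvidia-smi || exit 1`.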

Installing Triton Inference Server Using Docker

Docker is the simplest way to install Triton: NVIDIA publishes prebuilt container images that bundle the server and its dependencies in an isolated environment, so nothing has to be compiled on the host. Below are the steps for installation:

1. Install Docker

To install Docker, run the following commands:

```bash
sudo apt update
sudo apt install -y docker.io
sudo systemctl enable docker
sudo systemctl start docker
```

To verify the installation, run:

```bash
docker --version
```

2. Install NVIDIA Container Toolkit

For GPU acceleration, install NVIDIA Container Toolkit:

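```bash
# Add NVIDIA's signing key and the container toolkit apt repository
curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey \
  | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg
curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list \
  | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' \
  | sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

# Install the toolkit and register the NVIDIA runtime with Docker
sudo apt update
sudo apt install -y nvidia-container-toolkit
sudo nvidia-ctk runtime configure --runtime=docker
sudo systemctl restart docker
```

3. Pull the Triton Docker Image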

Once Docker and NVIDIA Container Toolkit are installed, pull the Triton image:

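```bash
docker pull nvcr.io/nvidia/tritonserver:<xx.yy>-py3
```

Replace `<xx.yy>` with the Triton release you want, for example `24.05`. Triton images on NGC are published under versioned release tags of this form, so pin an explicit release instead of relying on a `latest` tag; the NGC catalog lists the available releases.

4. Run Triton Server Container

To run Triton using Docker, execute:

```bash
docker run --gpus all --rm -p 8000:8000 -p 8001:8001 -p 8002:8002 \
  -v /path/to/model/repository:/models \
  nvcr.io/nvidia/tritonserver:<xx.yy>-py3 \
  tritonserver --model-repository=/models
```

Replace `/path/to/model/repository` with the actual location of your model repository and `<xx.yy>` with the release you pulled. Port 8000 serves HTTP requests, 8001 serves gRPC, and 8002 exposes Prometheus metrics.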
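The `--model-repository` path must point at a directory Triton can parse. In the layout Triton expects, each model gets its own subdirectory containing numbered version directories (such as `1/`) and, typically, a `config.pbtxt` file. The sketch below checks that layout before you start the container; the function name `check_model_repo` is hypothetical, and it only inspects directory structure, not whether the model files or configuration are valid.

```bash
#!/usr/bin/env bash
# check_model_repo (hypothetical helper): sanity-check a Triton model repository.
# Expected layout, one directory per model:
#   <repo>/<model>/config.pbtxt   (may be omitted for backends that auto-generate it)
#   <repo>/<model>/<version>/...  (version directories are named with digits, e.g. 1/)
# Returns 1 if the repository is missing, empty, or a model has no version directory.
check_model_repo() {
  local repo="$1" model name nversions nmodels=0 status=0
  if [ ! -d "$repo" ]; then
    echo "not a directory: $repo" >&2
    return 1
  fi
  for model in "$repo"/*/; do
    [ -d "$model" ] || continue   # the glob matched nothing
    nmodels=$((nmodels + 1))
    name=$(basename "$model")
    # Count immediate subdirectories whose names consist only of digits.
    nversions=$(find "$model" -mindepth 1 -maxdepth 1 -type d | grep -cE '/[0-9]+$' || true)
    if [ "$nversions" -eq 0 ]; then
      echo "$name: no numeric version directory" >&2
      status=1
    fi
    # Missing config is only a warning, since some backends can generate one.
    if [ ! -f "${model}config.pbtxt" ]; then
      echo "$name: no config.pbtxt (fine only if the backend can generate one)" >&2
    fi
  done
  if [ "$nmodels" -eq 0 ]; then
    echo "no model directories found in $repo" >&2
    return 1
  fi
  return "$status"
}
```

For example, `check_model_repo /path/to/model/repository && docker run ...` starts the container only when the layout looks plausible, which surfaces a mistyped mount path before Triton itself fails to load models.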

Installing Triton Inference Server Without Docker

If you prefer to install Triton locally without Docker, follow these steps:

1. Install Dependencies

Ensure that your system has the necessary dependencies:

```bash
sudo apt update && sudo apt install -y \
  build-essential cmake libb64-dev libcurl4-openssl-dev libgoogle-glog-dev \
  libgrpc++-dev libprotobuf-dev protobuf-compiler python3 python3-pip
```

2. Clone the Triton Repository

Download the Triton source code using Git:

```bash
git clone --recursive https://github.com/triton-inference-server/server.git
cd server
```

3. Build Triton Inference Server

To build Triton from source, use:

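```bash
mkdir build
cd build
cmake ..
make -j$(nproc)
sudo make install
```

The build can take a long time, depending on your system configuration. Triton's build documentation describes the `build.py` script in the repository root as the main way to build the server together with its backends, so consult it if the plain CMake build above does not produce the components you need.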

4. Running Triton Locally

Once installed, start Triton with:

```bash
tritonserver --model-repository=/path/to/model/repository
```

This launches the Triton Inference Server using the specified model repository.

Verifying Triton Installation

After installation, verify that Triton is running correctly by checking its status:

```bash
curl -v localhost:8000/v2/health/ready
```

If Triton is running properly, the verbose output shows an `HTTP/1.1 200 OK` status, meaning the server is ready to accept inference requests.
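When Triton starts in the background (for example in a script or CI job), loading models can take a while, so a single `curl` may run before the server is ready. The loop below polls the same `/v2/health/ready` endpoint until it answers with HTTP 200 or a timeout expires. It is a sketch: the function name `wait_for_triton`, its default URL, and the 60-second default timeout are assumptions to adjust for your setup.

```bash
#!/usr/bin/env bash
# wait_for_triton (hypothetical helper): poll Triton's HTTP readiness endpoint.
# Usage: wait_for_triton [base_url] [timeout_seconds]
# Returns 0 once /v2/health/ready answers 200, 1 if the timeout expires first.
# The timeout is approximate: it counts 1-second sleeps and ignores time spent in curl.
wait_for_triton() {
  local url="${1:-http://localhost:8000}" timeout="${2:-60}" waited=0 code=""
  while [ "$waited" -lt "$timeout" ]; do
    # -w prints only the status code; curl reports 000 if it cannot connect.
    code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url/v2/health/ready" || true)
    if [ "$code" = "200" ]; then
      echo "Triton is ready at $url"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "Triton not ready after ${timeout}s (last status: ${code:-none})" >&2
  return 1
}
```

A script can then run `wait_for_triton http://localhost:8000 120 || exit 1` right after starting the container and only send inference requests once it succeeds.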

Common Troubleshooting Issues

1. Docker Not Recognizing GPU

If Docker does not detect your GPU, check that the NVIDIA Container Toolkit is installed correctly:

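```bash
docker run --rm --gpus all ubuntu nvidia-smi
```

If this fails, run `nvidia-smi` directly on the host to confirm that the driver works, and make sure Docker was restarted after the NVIDIA Container Toolkit was configured; reinstall the driver or the toolkit if either check fails. A CUDA toolkit on the host is not required, because the Triton image ships its own CUDA libraries.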

2. Triton Server Fails to Start

If Triton does not start, check the logs with:

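```bash
docker logs <container_id>
```

Replace `<container_id>` with the ID of your Triton container, which `docker ps -a` lists. Note that a container started with `--rm` is deleted as soon as it exits, along with its logs; in that case, read the output printed to your terminal or rerun the container without `--rm` so its logs remain available.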

3. Port Conflicts

If you encounter port conflicts, ensure that no other applications are using ports 8000, 8001, and 8002:

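```bash
sudo ss -tulnp | grep -E ':800[0-2]\b'
```

(`sudo netstat -tulnp` from the net-tools package can be used the same way.) Stop any conflicting processes before restarting Triton, or map Triton to free host ports instead, for example `-p 9000:8000`.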
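If `netstat` is not installed, bash itself can test whether something is accepting connections on Triton's default ports through its `/dev/tcp` redirection. A minimal sketch follows; the function name `check_ports` is hypothetical, it requires bash (not `sh`), and it only detects listeners reachable on `127.0.0.1`, so a service bound to a different address may go unnoticed.

```bash
#!/usr/bin/env bash
# check_ports (hypothetical helper): report whether TCP ports on 127.0.0.1 accept connections.
# Defaults to Triton's HTTP (8000), gRPC (8001), and metrics (8002) ports.
# Returns 1 if any port is already in use.
check_ports() {
  local port busy=0
  local ports=("$@")
  [ "${#ports[@]}" -gt 0 ] || ports=(8000 8001 8002)
  for port in "${ports[@]}"; do
    # Opening /dev/tcp/host/port in a subshell succeeds only if something is listening.
    if (exec 3<>"/dev/tcp/127.0.0.1/$port") 2>/dev/null; then
      echo "port $port: in use"
      busy=1
    else
      echo "port $port: free"
    fi
  done
  return "$busy"
}
```

Running `check_ports` with no arguments checks 8000 to 8002; pass other port numbers to test alternative host ports before remapping them with `-p`.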

Conclusion

Triton Inference Server provides an efficient way to deploy AI models at scale. Whether you use the Docker image or build from source, the commands in this guide cover the full setup: installing the prerequisites, starting the server against a model repository, confirming readiness through the health endpoint, and diagnosing GPU, startup, and port problems. Following these steps should get Triton running reliably for your AI applications.